VRSG Features

MVRsimulation Virtual Reality Scene Generator (VRSG) supports the typical features required for image generators used in flight, ground vehicle, and infantry training simulators, and in many other military simulation applications. An image generator is typically driven by the user's simulator host model, such as a flight model. VRSG renders the virtual world as specified by host parameters such as location and field of view.

- Asynchronous texture paging technology for visualizing high-resolution, photo-realistic databases at 60 Hz; can address up to 2 TB of texture in real time.

- Database geometry paging, level-of-detail blending, decoupled terrain and texture level-of-detail.

- Dynamic lighting, which uses per-vertex color, blended with per-polygon material, combined with ambient lighting conditions and directional light sources for efficient and convincing dynamic lighting effects.

- Ephemeris model for sun and moon position, moon phase, and light-point based star fields. Time options let VRSG dynamically set the position of celestial bodies in the sky and the light source angles.

- Volumetric ray-traced cloud system and storm cells with optional volumetric precipitation effects.

- Multiple atmospheric layers including ground fog and haze with sun-angle dependent density and color.

- 3D ocean simulation, featuring realistic wave motion, 12 Beaufort sea states, 3D wakes, vessel surface motion, accurate environment reflections, and support for bathymetric data for shoreline wave shape and opacity.

- Multi-texture techniques such as normal maps, shadow maps, light maps, and decals.

- Model-edge anti-aliasing for cases where FSAA is not an option due to its resource usage or performance impact.

- Object-on-object dynamic shadowing for applications such as tanker refueling.

- Shadows cast by dynamic entities, cultural features, and volumetric clouds. Screen space ambient occlusion.

- Full-featured high-performance light points that respond realistically to visibility conditions.

- Support for potentially thousands of independent, concurrent, steerable light lobes with per-pixel axial and radial attenuation on video cards that support Pixel Shader Model 5.0.

- User-extendable particle effects that respond to wind: dust trails, contrails, rotor wash, tactical smoke, smoke plumes, blown sand or snow, and explosions.

- Dynamic cratering, deforming terrain surfaces to represent craters resulting from munitions impact.

- Conversion utilities for FBX models and OpenFlight databases and models.

- Full mission function support to meet needs ranging from ground-based vehicle simulators to fast-moving fixed-wing aircraft.

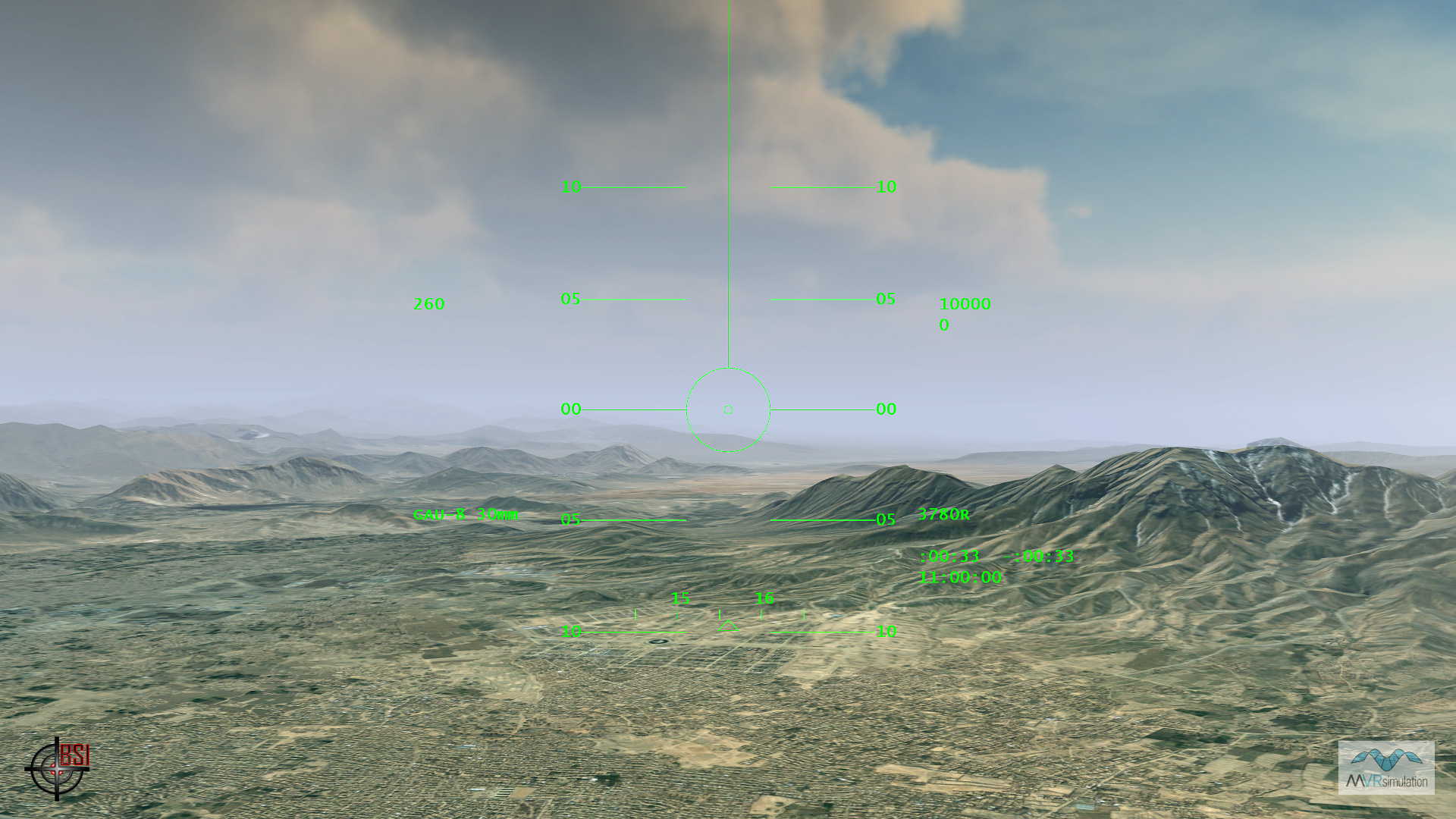

- Support for mission rehearsal requirements such as the ability to see long distances (that is, to a far horizon) and to process large amounts of geospecific imagery draped upon terrain elevation data.

- Significant Common Image Generator Interface (CIGI) support for communication between an image generator and host device in simulations.

- Native high-performance 3D human character render engine; no third-party software required. In addition, VRSG First Person Simulator (FPS) controls your character for viewing the scenario from the character's point of view.

- Ability to exploit a multiple-CPU computer to support asynchronous paging of both terrain geometry and texture.

- Support for synchronized multiple channels and multiple viewports per channel. Multiple viewports on a single visual channel may be overlapped or spatially disjoint.

- Multiple mechanisms for adding 2D overlays to the 3D display.

- Support for edge blending and distortion correction through third-party solutions such as Scalable Display Technologies and VIOSO.

- Multiple degree-of-freedom hardware tracker support for controlling the position and/or orientation of the VRSG viewpoint.

- Physics-based IR simulation featuring real-time computation of the IR sensor image directly from the visual database, without the need to store a sensor-specific database. Includes a radiance-based automatic gain control (AGC), manual level/gain override, and noise as a function of dynamic range. Mid-range and far IR wavebands are supported to model thermal imagers.

- Notional sensor features, including dynamic hot-spots and user-controlled intensity as a function of material and time-of-day.

- Post-processing effects, including noise, blur, depth-of-field, level, gain, polarity, digital zoom, and AC banding.

- Electro-Optic (EO) simulation mode.

- Night Vision Goggle (NVG) stimulation modes.

- Radar simulation to support applications such as F-16 DRLMS, and SAR and ISAR displays for UAVs or similarly equipped platforms.

A viewport is a scene rendered in a VRSG channel. A single VRSG channel can be divided up into multiple viewports, with each viewport assigned to a different view of the scene. Multiple viewports on a single VRSG channel may be overlapped or spatially apart. The viewports can be horizontally mirrored to support applications that demand this (such as a rear-view mirror), or display systems whose optics impose a horizontal reversal of the image.

Applications with multiple viewports from one VRSG instance include:

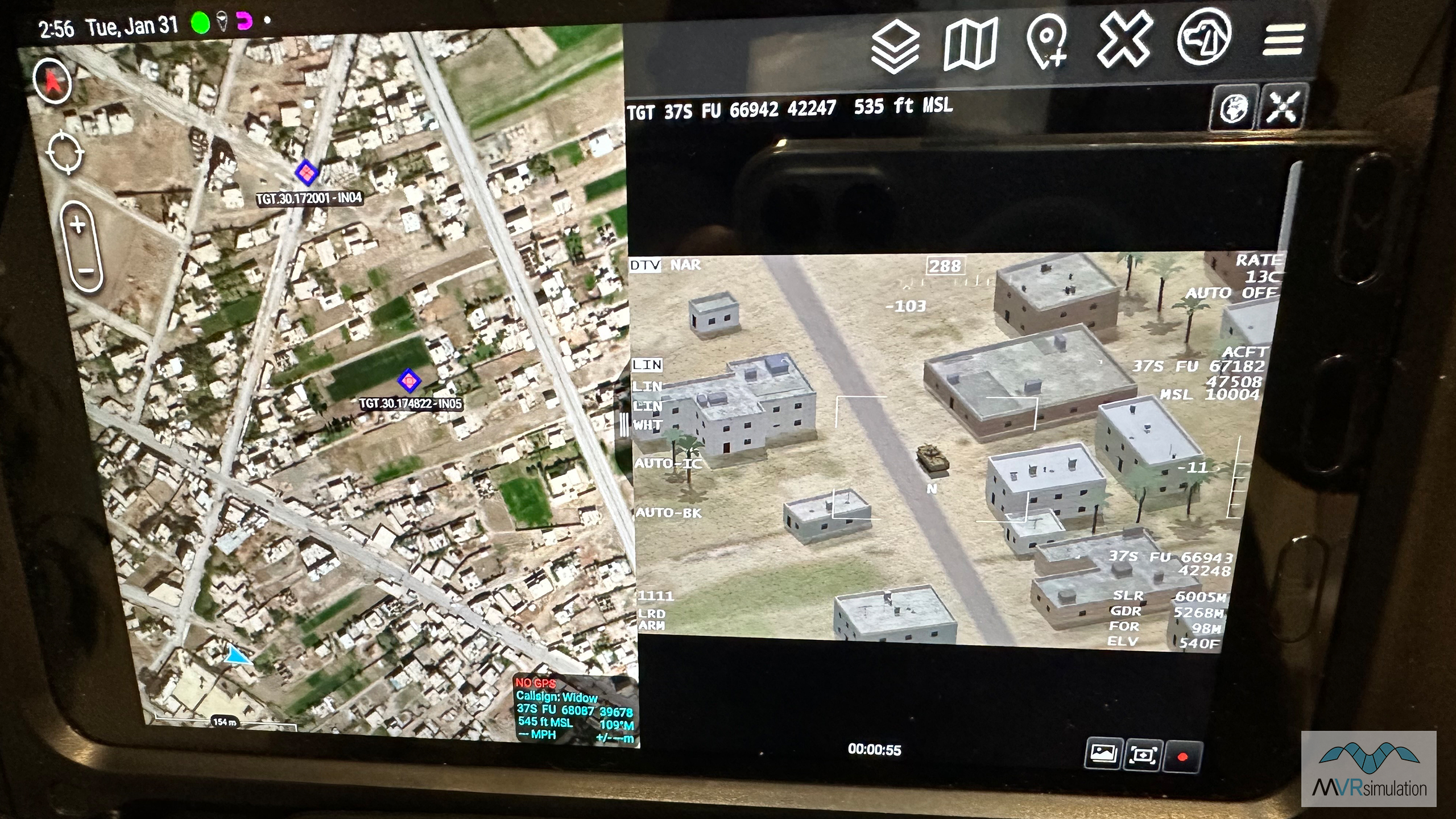

- Picture-in-picture or picture-by-picture arrangements.

- UAS simulation, where often the nose camera and the articulated sensor camera are each given a dedicated viewport.

- VR HMD devices, which require at least two viewports (one for each eye) or, in some cases, four viewports (two for each eye), depending on the manufacturer.

- Two or more projectors on a dome display; one viewport could be allocated to a skyward scene, and the other to a denser terrain scene, as a way of achieving some load balancing.

- Two monitors connected with the Nvidia Surround feature; placing two viewports at the monitor boundaries enables you to have a different scene on each monitor or projector.
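The viewport arrangements above can be sketched in a few lines of code. The example below is only an illustration of the idea of dividing one channel into overlapping, disjoint, or mirrored viewports. The `Viewport` class, its field names, and the normalized-coordinate convention are assumptions made for this sketch; they are not VRSG's configuration format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    """Hypothetical viewport description (not a VRSG data structure).

    Position and size are fractions of the channel, so (0, 0, 1, 1)
    covers the whole channel.
    """
    name: str
    x: float
    y: float
    width: float
    height: float
    mirrored: bool = False  # horizontal reversal, e.g. a rear-view mirror

    def to_pixels(self, channel_w: int, channel_h: int) -> tuple:
        """Convert the normalized rectangle to (x, y, width, height) in pixels."""
        return (round(self.x * channel_w), round(self.y * channel_h),
                round(self.width * channel_w), round(self.height * channel_h))

    def overlaps(self, other: "Viewport") -> bool:
        """True if the two rectangles share any area (touching edges do not count)."""
        return (self.x < other.x + other.width and other.x < self.x + self.width
                and self.y < other.y + other.height and other.y < self.y + self.height)

    def map_u(self, u: float) -> float:
        """Map a horizontal image coordinate in [0, 1], flipping it if mirrored."""
        return 1.0 - u if self.mirrored else u


# Picture-in-picture: a sensor inset overlapping a full-channel nose camera.
nose = Viewport("nose_camera", 0.0, 0.0, 1.0, 1.0)
sensor = Viewport("sensor_camera", 0.70, 0.70, 0.25, 0.25)

# Picture-by-picture: two spatially separate halves of the channel.
left = Viewport("left", 0.0, 0.0, 0.5, 1.0)
right = Viewport("right", 0.5, 0.0, 0.5, 1.0)

# A horizontally mirrored viewport, as for a rear-view mirror.
mirror = Viewport("rear_view", 0.35, 0.0, 0.30, 0.15, mirrored=True)

if __name__ == "__main__":
    for vp in (nose, sensor, left, right, mirror):
        print(vp.name, vp.to_pixels(1920, 1080))
    print("inset overlaps nose camera:", nose.overlaps(sensor))
    print("left overlaps right:", left.overlaps(right))
```

Normalized coordinates are used here so the same layout description applies at any channel resolution; a real configuration may well use pixel coordinates instead.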

VRSG license pricing is structured on a per-viewport basis, in three tiers: a single viewport, two or three viewports, and four or more viewports. (See the Price List for more information about VRSG viewport pricing.)

- Support for the MUSE VIDD V2.4 for high-fidelity UAV training.

- Built-in UAV sensor payload model allowing any DIS airborne platform to be used as a UAV, for situations when a notional UAV will suffice for your training needs.

- Real-time HD H.264/H.265 video generation with embedded KLV metadata. VRSG supports the KLV encoding of UAV telemetry in a compliant subset of NATO standard STANAG 4609, including MISB 0601.1 and 0601.9 metadata and MISB security metadata standard 0104.5.

- MVRsimulation's own Video Player, which is optimized for VRSG H.264/H.265 streaming and can decode and display KLV metadata.

- Built-in 2D overlays for several UAV / RPA platforms (available in U.S. domestic release only).

- Laser rangefinder/designator mode for designating targets for other simulations.

- NVG IR pointer mode for night-time target marking.

- Ability to stimulate any device capable of receiving full motion video (FMV), including ATAK and ROVER devices, with streaming HD digital video of UAV/RPA or targeting pod feeds.

- Integration with simulated military equipment for laser ranging and target designation.

- Support for off-the-shelf devices such as virtual binoculars and VR/XR systems, including Varjo XR-4, HTC Vive, HP Reverb, and SA Photonics, to enhance the fidelity of JTAC training.

- Ability to capture and visualize eye-tracking data in Varjo mixed-reality headsets; depicts the gaze of each eye independently as a color-coded 3D cone; can export this data via DIS as a PDU log for later after-action playback.

- Mature user interface and feature set supporting real-time or after-action review functions.

- Native support for DIS.

- A variety of attachment modes: tether, mimic, orbit, compass, and track.

- Fire lines and shot lines for visualization of engagements.

- Visualization of designator PDUs.

- Savable viewpoints, entity-relative or database-relative.

- Virtual world sound generation.

- Robust 3D model libraries: military and commercial vehicles (air, ground, marine), munitions, military and civilian characters, buildings, trees and foliage, signs, street elements, and other culture.

- Ongoing entity additions in support of Combat Air Force Distributed Mission Operations (CAF DMO) requirements.

- Target recognition training support using screen captures and videos of models placed in VRSG scenes.

- Model Viewer to preview model switch states, articulated parts, and thermal hotspots.

- Game-level interface that enables users to add and manipulate 3D content from the content libraries to build up culture for dense areas of interest and for scripting activities of characters and vehicles on the terrain.

- Ability to create dynamic pattern-of-life scenarios to play back in VRSG.

MVRsimulation's Video Player is a simple, flexible, low-latency player which offers a borderless mode, so it can be embedded easily into cockpit displays. The player can decode and display encoded KLV metadata and is optimized for use with VRSG H.264, H.265, and MPEG2 streaming video.
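For readers unfamiliar with KLV, the following is a minimal sketch of the general key-length-value framing that such metadata relies on: a key, a BER-encoded length, and the value bytes. It is not MVRsimulation's encoder or decoder. The 16-byte `PLACEHOLDER_KEY`, the one-byte item tags, and the sample values are hypothetical and chosen only for illustration, and the sketch omits details a compliant MISB stream requires, such as the checksum item.

```python
def ber_length(n: int) -> bytes:
    """Encode a length in BER: short form below 128, long form otherwise."""
    if n < 0:
        raise ValueError("length must be non-negative")
    if n < 128:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def encode_klv(key: bytes, value: bytes) -> bytes:
    """Frame one value as key + BER length + value."""
    return key + ber_length(len(value)) + value


def decode_klv(buf: bytes, key_len: int, offset: int = 0):
    """Decode one triplet; return (key, value, offset of the next triplet)."""
    key = buf[offset:offset + key_len]
    pos = offset + key_len
    first = buf[pos]
    pos += 1
    if first & 0x80:  # long form: low 7 bits give the number of length bytes
        count = first & 0x7F
        length = int.from_bytes(buf[pos:pos + count], "big")
        pos += count
    else:             # short form: the byte is the length itself
        length = first
    return key, buf[pos:pos + length], pos + length


# Hypothetical 16-byte outer key; a real stream uses a registered universal label.
PLACEHOLDER_KEY = bytes(range(16))

# Two illustrative items with one-byte tags (tag numbers are assumptions here).
timestamp_us = (1_700_000_000_000_000).to_bytes(8, "big")
heading_raw = (0x4000).to_bytes(2, "big")
items = encode_klv(bytes([2]), timestamp_us) + encode_klv(bytes([5]), heading_raw)
packet = encode_klv(PLACEHOLDER_KEY, items)


def decode_items(payload: bytes) -> dict:
    """Walk a payload of one-byte-tag items and return {tag: value bytes}."""
    out, pos = {}, 0
    while pos < len(payload):
        tag, value, pos = decode_klv(payload, 1, pos)
        out[tag[0]] = value
    return out


if __name__ == "__main__":
    key, payload, _ = decode_klv(packet, 16)
    for tag, value in decode_items(payload).items():
        print(tag, value.hex())
```

The same `decode_klv` routine handles both the outer packet (16-byte key) and the inner items (1-byte tags) because only the key length differs between the two levels.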