Physics-Based Real-Time Sensor Simulation

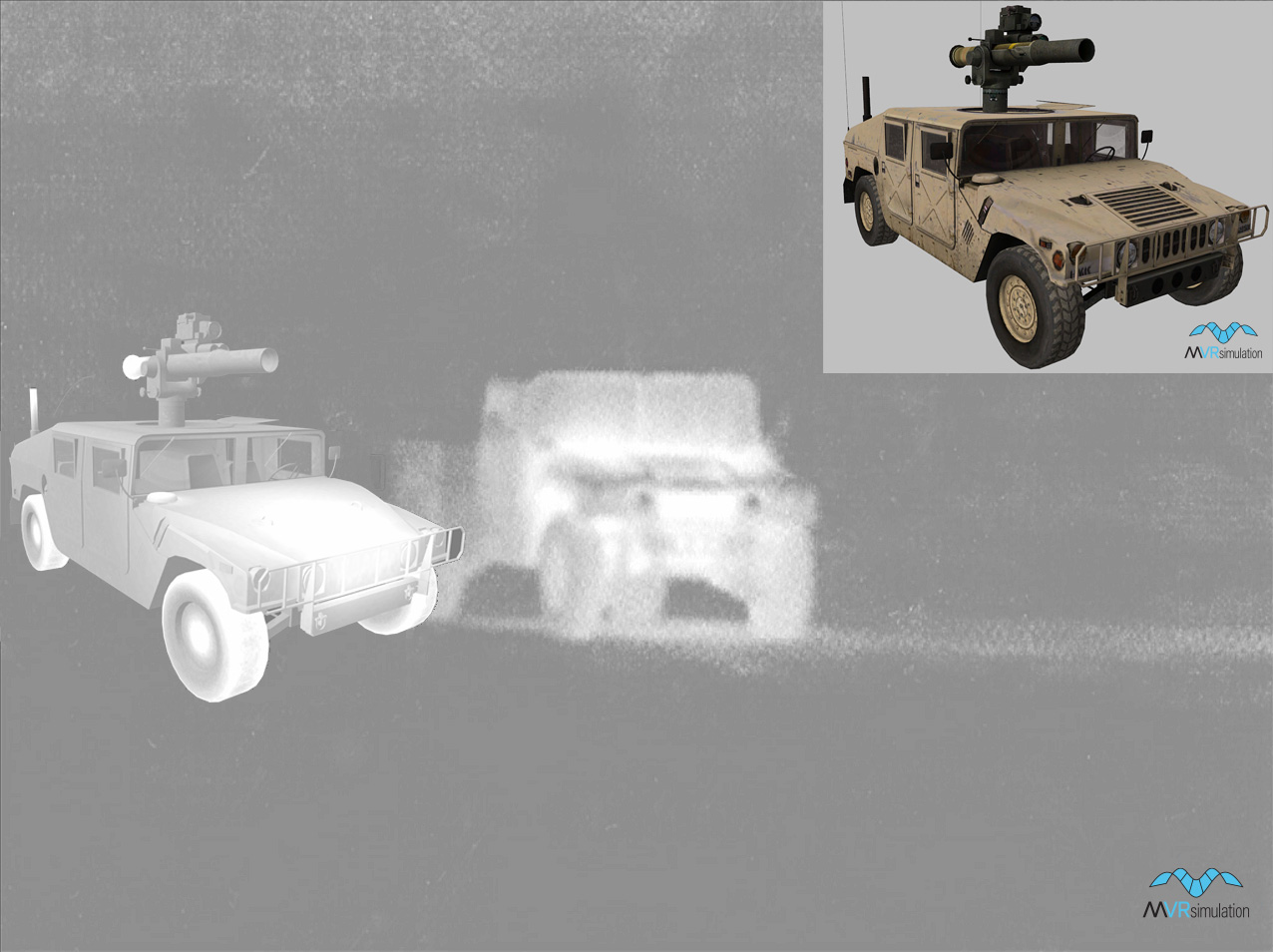

MVRsimulation VRSG provides thermal sensor imagery that portrays the environment of a simulated device with true perspective and real-time responses to the movement of a vehicle and its sensor. Thermal sensors portray relative temperature differences in a given scene and are therefore able to provide information that is not available in the visible spectrum (that is, not available in the "out-the-window" view). The contrast in temperatures aids users in identifying and classifying the features and entities depicted in the image.

VRSG features significant enhancements to the simulation of an infrared (IR) sensor. Working jointly with Technology Service Corporation (TSC), MVRsimulation developed an advanced physics-based IR sensor modeling capability, which is available in the domestic release of VRSG. (Note: International VRSG users require ITAR approval to use this feature.)

The VRSG sensor view is a user-controllable image that models two levels of thermal views:

- Physics-based – VRSG's enhanced physics-based IR uses a physics-based model licensed from TSC, in conjunction with IR rendering technology developed by MVRsimulation. This major enhancement features real-time computation of the IR sensor image directly from the visual database, without the need to store a sensor-specific database. This real-time model combines automatic material classification of visual RGB imagery with a physics-based IR radiance and sensor model.

- Notional – VRSG's high-level sensor controls, available on the VRSG Dashboard, enable users to configure the nominal intensities of terrain, cultural elements, and dynamic models. With these controls, users can set the degree to which dynamic models stand out relative to their terrain background.

VRSG's physics-based IR

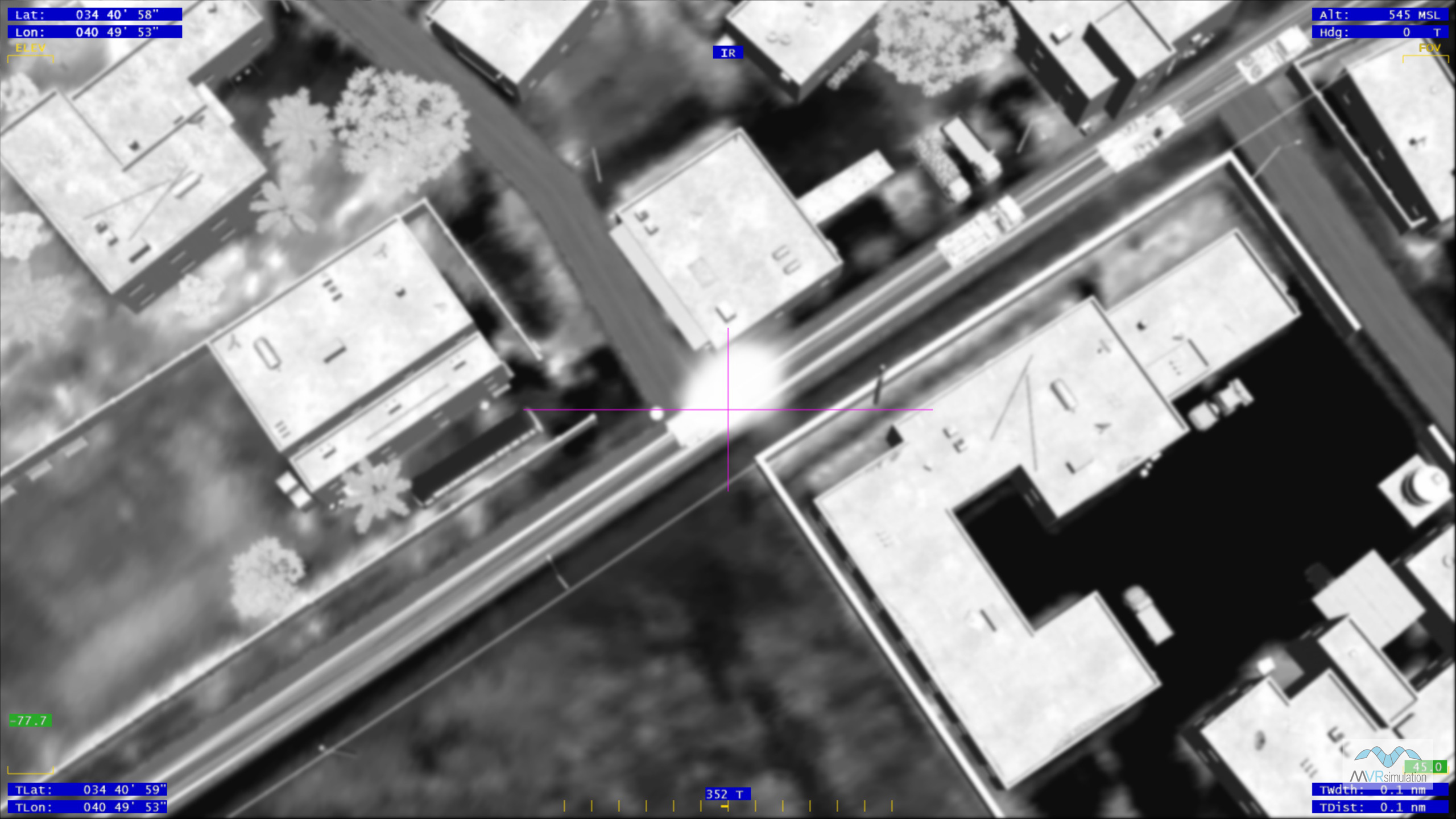

The key feature of VRSG's physics-based IR capability is the real-time computation of the IR sensor image directly from the visual scene, with no off-line precomputation or data storage requirements. This real-time model combines automatic material classification of visual RGB imagery with a physics-based IR radiance and sensor model.

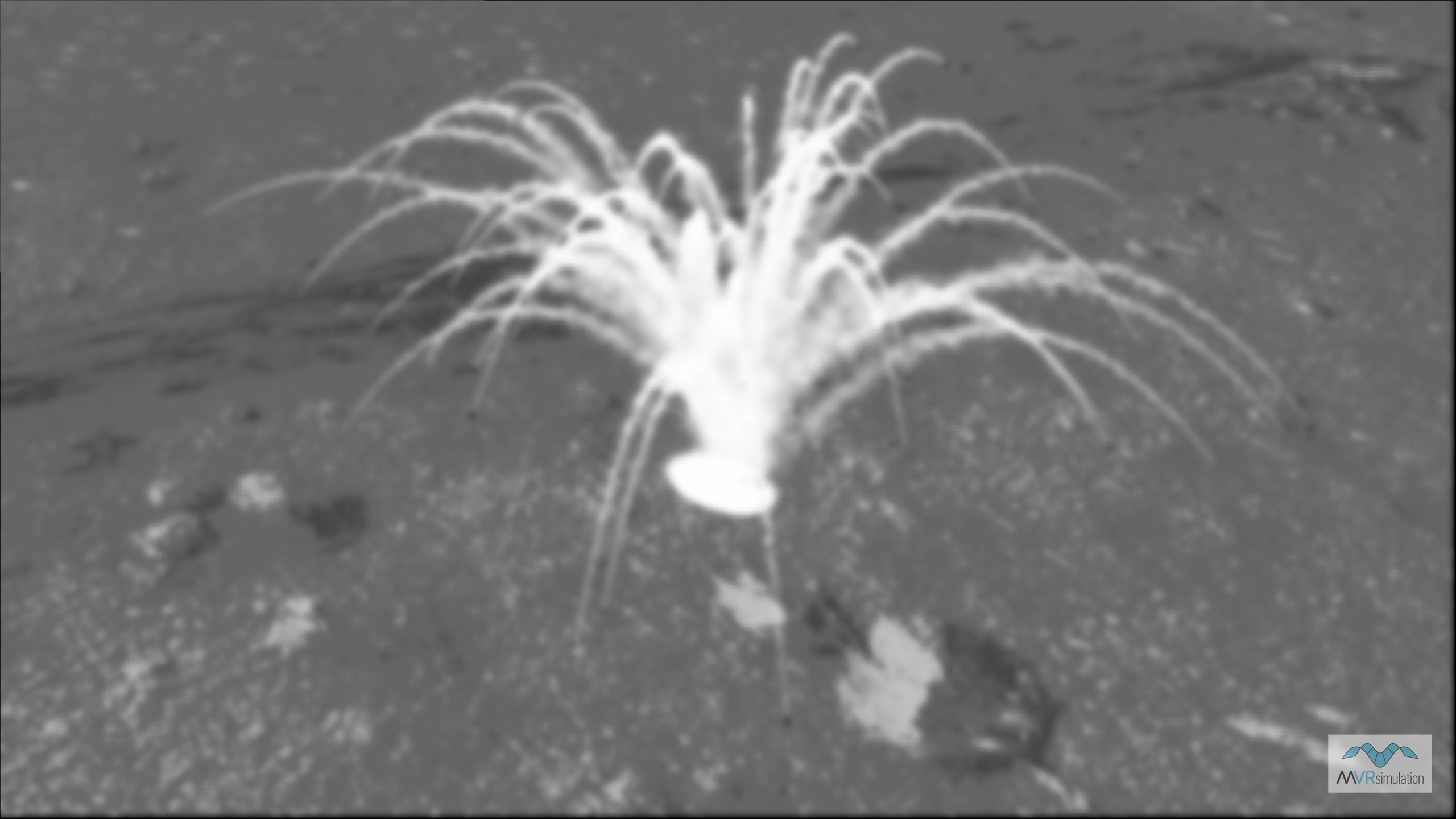

As the resolution of the imagery increases, so does the fidelity of the resulting sensor simulation. The accompanying image shows the thermal view of terrain compiled at 2.5 cm resolution using 2.5 cm imagery collected by MVRsimulation's imagery collection small UAV.

VRSG is capable of rendering vast geographic expanses of high-resolution geospecific imagery (such as CONUS++ 3D terrain at 1-meter color resolution). Material-classified representations of such large, high-resolution areas are generally not available, nor are they practical to acquire. The technology developed by MVRsimulation and TSC solves this classification problem. The process uses pixel-shader technology to convert the per-pixel filtered visual-spectrum RGB color into its component materials, which the physics-based IR model then uses to compute IR radiance and sensor display intensity. The physics-based model takes full account of the local environment, time of day, and sensor characteristics. The result is a physically accurate sensor scene derived from a visual-spectrum database.

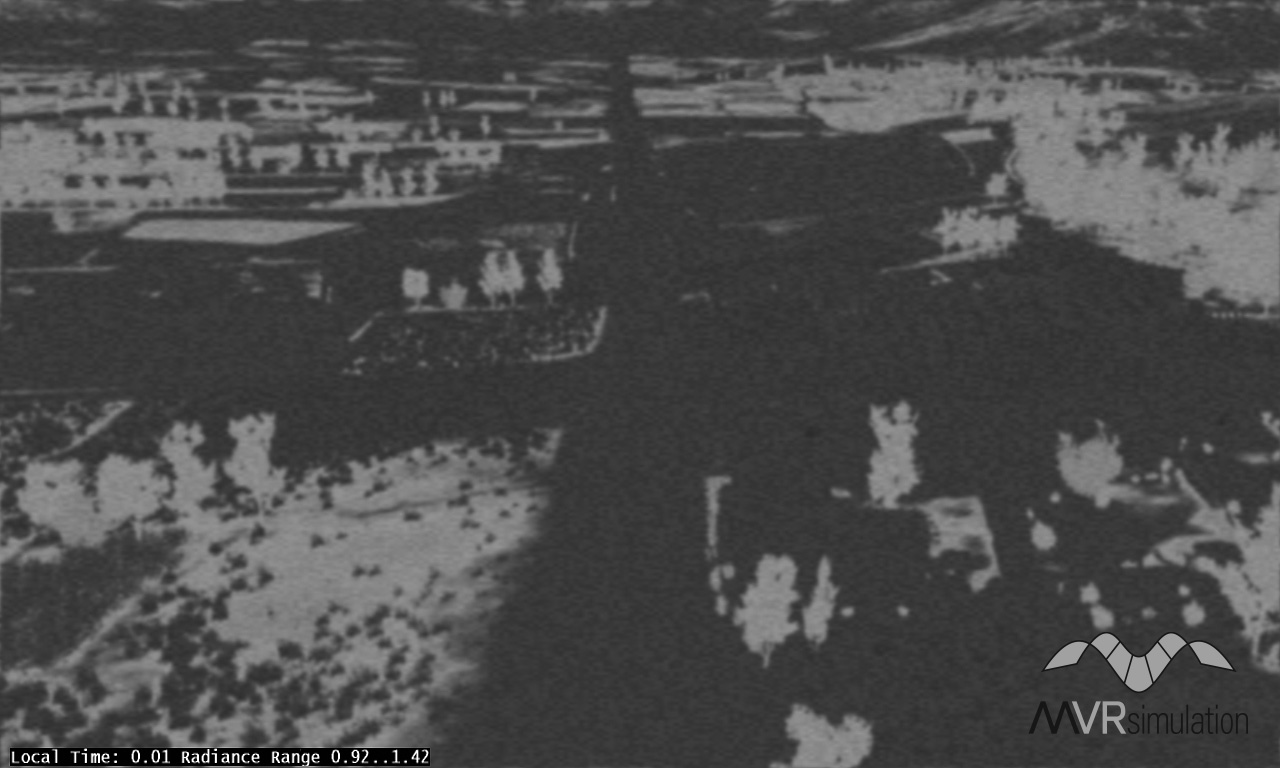

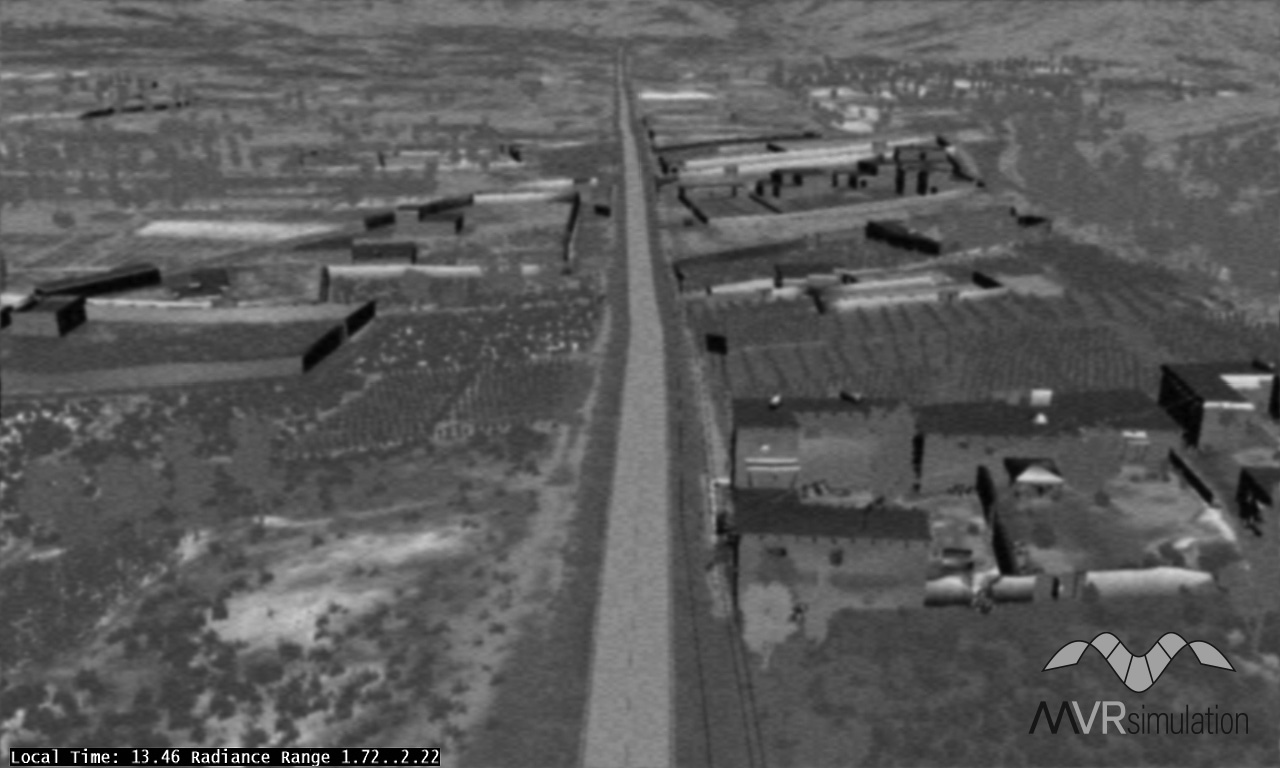

The VRSG images above show a scene on MVRsimulation's Afghan village 3D terrain at midnight and in the early afternoon. Notice in the scene on the left the warmer vegetation and the cooler asphalt road and concrete structures. The cooler 3D structures are difficult to discern as they have cooled to an even temperature and blend into the background. In contrast, the image on the right shows the same area in the afternoon, after the sun has heated up the asphalt road and the stucco sides of the buildings. The vegetation is cooler than the concrete and asphalt structures heated by the sun. This example illustrates thermal inversion.

The only offline step is for the user to create a classification palette for supervised material classification, associating up to 5 RGB colors with physical materials from the VRSG/RealIR material database. Using the per-pixel material properties and the real-time parameters derived from environmental conditions, VRSG computes a per-pixel radiance raster image for each video frame, as would be seen by an actual thermal sensor.
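To illustrate the idea of a classification palette, the following is a minimal sketch, not VRSG's implementation. It assumes a palette of five RGB anchor colors and decomposes a pixel into material weights using inverse color distance. The palette colors, the material names, and the functions `material_weights` and `classify` are hypothetical; the source does not describe the actual weighting scheme or the contents of the VRSG/RealIR material database.

```python
from math import dist

# Hypothetical palette: up to five RGB anchor colors, each tied to a material.
# Colors and names are illustrative only, not VRSG/RealIR database entries.
PALETTE = [
    ((60, 110, 50), "vegetation"),
    ((70, 70, 75), "asphalt"),
    ((180, 175, 165), "concrete"),
    ((150, 120, 90), "soil"),
    ((40, 70, 110), "water"),
]


def material_weights(rgb, palette=PALETTE, eps=1e-6):
    """Return normalized inverse-distance weights per material for one pixel.

    Inverse-distance weighting is an assumption made for this sketch.
    """
    inverse = [(name, 1.0 / (dist(rgb, color) + eps)) for color, name in palette]
    total = sum(weight for _, weight in inverse)
    return {name: weight / total for name, weight in inverse}


def classify(rgb, palette=PALETTE):
    """Return the single material whose palette color is closest to the pixel."""
    weights = material_weights(rgb, palette)
    return max(weights, key=weights.get)


if __name__ == "__main__":
    pixel = (62, 108, 52)  # a greenish pixel
    print(classify(pixel))
    print(material_weights(pixel))
```

In a real-time renderer this lookup would run per pixel in a shader; the pure-Python form here only shows the shape of the mapping from color to material mixture.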

This radiance image is processed in real time by applying blurring, noise, and Automatic Gain Control (AGC). The AGC process maps the radiance range into a display dynamic range, and can be disabled by the user if manual level and gain controls are to be used. The AGC process uses a histogram analysis of the radiance image to determine an appropriate display dynamic range for the scene. (VRSG version 6 introduced a more efficient AGC implementation that takes advantage of DirectX 11 compute shaders.) Sensor noise is automatically computed as a function of the display’s dynamic range.
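Histogram-based AGC can be sketched as follows. This is an illustrative CPU version under stated assumptions, not the compute-shader implementation in VRSG: it builds a histogram of radiance values, picks a display window from the cumulative distribution, and rescales into display levels. The function names and the 1% / 99% clipping fractions are assumptions chosen for the example.

```python
def agc_window(radiance, bins=256, low_frac=0.01, high_frac=0.99):
    """Choose a (low, high) display window from the cumulative radiance histogram."""
    lo, hi = min(radiance), max(radiance)
    if hi == lo:
        return lo, hi
    width = (hi - lo) / bins
    hist = [0] * bins
    for value in radiance:
        index = min(int((value - lo) / width), bins - 1)
        hist[index] += 1

    total = len(radiance)
    cumulative = 0
    win_lo, win_hi = lo, hi
    found_lo = False
    for i, count in enumerate(hist):
        cumulative += count
        if not found_lo and cumulative >= low_frac * total:
            win_lo = lo + i * width  # lower edge of the bin reaching low_frac
            found_lo = True
        if cumulative >= high_frac * total:
            win_hi = lo + (i + 1) * width  # upper edge of the bin reaching high_frac
            break
    return win_lo, win_hi


def apply_agc(radiance, levels=256, **window_args):
    """Map radiance values to integer display levels, clipping outside the window."""
    win_lo, win_hi = agc_window(radiance, **window_args)
    if win_hi == win_lo:
        return [levels // 2] * len(radiance)  # flat scene: mid-gray
    scale = (levels - 1) / (win_hi - win_lo)
    return [
        int(round(min(max((value - win_lo) * scale, 0), levels - 1)))
        for value in radiance
    ]


if __name__ == "__main__":
    # A mostly uniform scene with one very hot pixel.
    scene = [10.0 + 0.01 * i for i in range(1000)] + [500.0]
    display = apply_agc(scene)
    print(display[0], display[999], display[-1])
```

Windowing on the cumulative histogram, rather than on the raw minimum and maximum, keeps a single very hot pixel from compressing the rest of the scene into a few gray levels.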

Geotypical content, such as 3D culture and moving objects, can be directly mapped to material codes using an external file that associates texture names with material codes. The geospecific and geotypical content use the same underlying physics model, resulting in a consistent rendering of the scene.

VRSG’s notional sensor-view features

VRSG’s notional IR-based simulation requires no material code mapping and offers these capabilities:

- Alternate thermal textures – VRSG switches to a different set of texture maps when operating in IR mode. These texture maps encode the desired signature of the model to include localized dynamic hot spots. VRSG’s military model library and human character library supply pre-built thermal textures for IR mode.

- Dynamic hot spots – Thermal textures can encode hot spots that are dynamically blended in as a function of vehicle activity.

- Selectable contrast – You can control the nominal intensity of the background environment.

- VRSG uses a non-linear atmospheric attenuation function appropriate to infrared.

- IR sensor imagery can be degraded using several built-in sensor post-processing effects, such as noise and AC banding.
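The source does not state which attenuation function VRSG uses. As one common non-linear example, the sketch below applies Beer-Lambert exponential transmittance, blending a target's intensity toward the background as range increases. The function names and the extinction coefficient value are assumptions made for illustration.

```python
import math


def transmittance(range_m, extinction_per_km=0.2):
    """Beer-Lambert transmittance along a path; the coefficient is illustrative."""
    return math.exp(-extinction_per_km * range_m / 1000.0)


def attenuate(target, background, range_m, extinction_per_km=0.2):
    """Blend target intensity toward the background as transmittance falls."""
    tau = transmittance(range_m, extinction_per_km)
    return tau * target + (1.0 - tau) * background


if __name__ == "__main__":
    for range_m in (0, 1000, 5000, 20000):
        print(range_m, round(attenuate(1.0, 0.3, range_m), 3))
```

Contrast between target and background decays exponentially with range in this model, so distant objects fade into the background faster than a linear falloff would suggest.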

The military vehicle models that are delivered in MVRsimulation’s 3D content libraries have built-in IR textures that represent heat signatures or hot spots that respond to some event or other stimulation. As each hot spot is unique, each can be stimulated independently for a thermal response to some vehicle activity.
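Independent hot-spot stimulation can be pictured as a per-texel blend. In the minimal sketch below, each named hot spot has its own mask and its own activity level in the range 0 to 1, and is blended over the base thermal intensity. The data layout, the hot-spot names, and the function `blend_hot_spots` are hypothetical and are not taken from VRSG's texture format.

```python
def clamp01(value):
    """Clamp a value to the range [0, 1]."""
    return min(max(value, 0.0), 1.0)


def blend_hot_spots(base, hot_spots, activity, hot_intensity=1.0):
    """Blend each hot spot into the base intensities by its own activity level.

    base:       list of base thermal intensities, one per texel
    hot_spots:  dict mapping a hot-spot name to a per-texel mask (0..1)
    activity:   dict mapping a hot-spot name to an activity level (0..1);
                hot spots missing from this dict stay inactive
    """
    result = list(base)
    for name, mask in hot_spots.items():
        level = clamp01(activity.get(name, 0.0))
        result = [
            texel + level * m * (hot_intensity - texel)
            for texel, m in zip(result, mask)
        ]
    return result


if __name__ == "__main__":
    base = [0.2, 0.2, 0.2]
    hot_spots = {"engine": [1.0, 0.0, 0.0], "exhaust": [0.0, 0.0, 1.0]}
    # Only the engine is running; the exhaust hot spot stays at base intensity.
    print(blend_hot_spots(base, hot_spots, {"engine": 1.0}))
```

Because each hot spot reads its own activity value, an engine, exhaust, or gun barrel can warm up independently of the others.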

VRSG's physics-based infrared feature is available in the U.S. domestic release of VRSG. Please contact MVRsimulation for an updated license unlock code to enable this feature.